Glowing Bear and Weechat in Kubernetes with Helm

Canonical

on 26 October 2017

Tags: Charmed Kubernetes , conjure-up , docker , Helm , IRC , kubernetes

This article originally appeared on Mike Wilson’s blog

With a renewed interest in IRC, I found that the best way to stay in touch is to keep Weechat running all the time and relay the chat to Glowing Bear. This gives me notifications of mentions on my phone and lets me see chat I missed while away from my computer.

What better place to deploy such a thing than Kubernetes? I recently discovered both Helm and conjure-up and I am sold on both. Until now I’ve been hand-rolling Kubernetes clusters, hand-editing yaml files for my workloads, and then using kubectl to deploy it all. This works, but it’s time-consuming to say the least. conjure-up turns cluster creation into a near no-op and Helm turns deployment into a trivial task. In this entry, we’ll spin up a Kubernetes cluster on LXD and deploy Weechat and Glowing Bear to it as an example of how easy it is to work with both conjure-up and Helm.

Conjure-up with LXD

This is how to easily spin up a Kubernetes cluster on LXD with conjure-up on an Ubuntu machine.

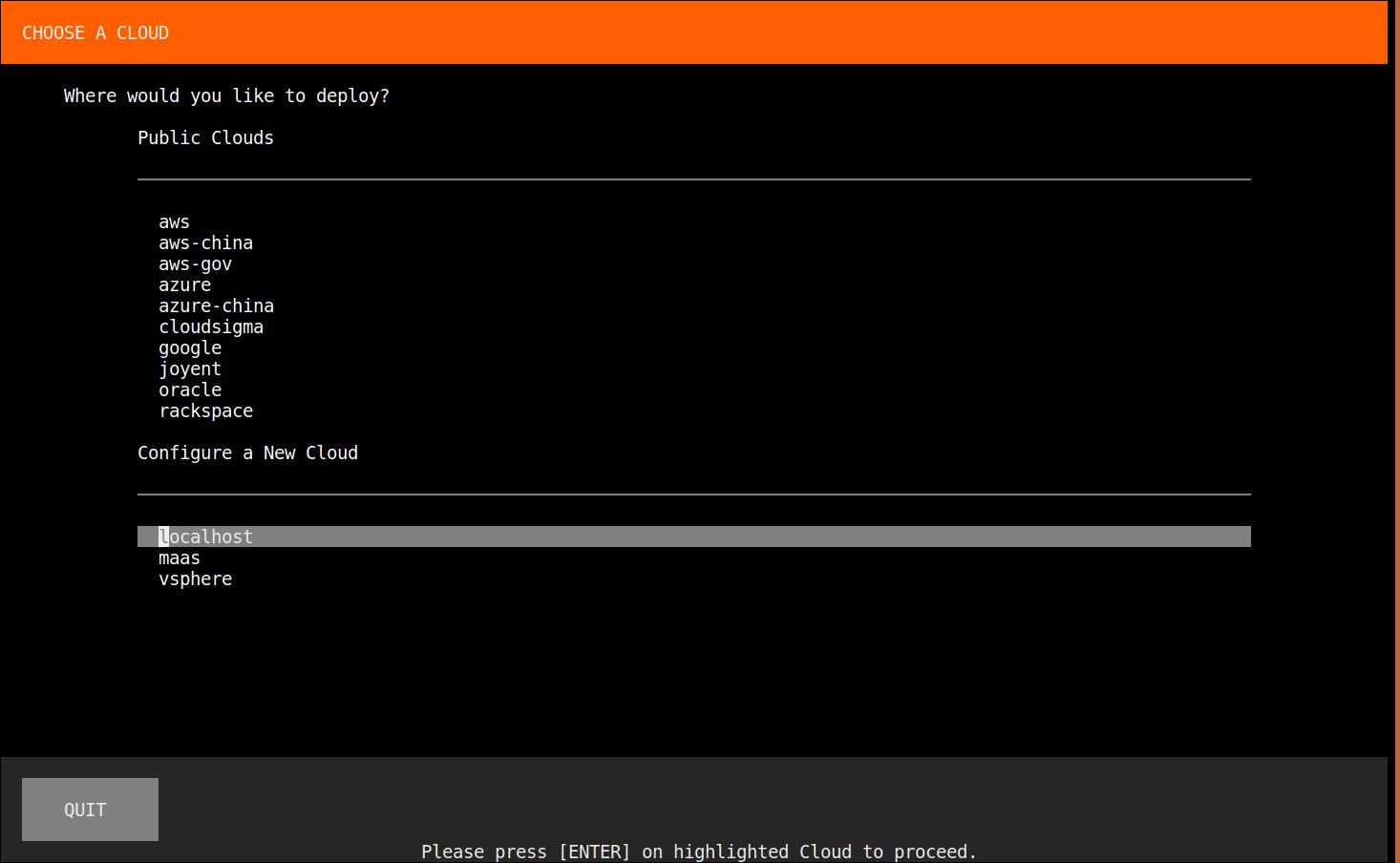

    $ sudo snap install lxd
    $ /snap/bin/lxd init --auto
    $ /snap/bin/lxc network create lxdbr0 \
        ipv4.address=auto ipv4.nat=true \
        ipv6.address=none ipv6.nat=false
    $ sudo snap install conjure-up --classic
    $ conjure-up kubernetes-core

You might have to add yourself to the lxd group before you can run the lxd commands. Other than that possible snag though, you’re off to the races. Note, you can also `conjure-up kubernetes` if you want a full Kubernetes. At this point you should be just a couple of screens away from the install happening.
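The group snag mentioned above can be checked before running anything. This is a minimal sketch: the helper name `in_group` is made up for illustration, and it assumes the group is called `lxd`, as the text implies. It only prints a hint and changes nothing on the system.

```shell
# in_group is a hypothetical helper, not part of LXD.
# It succeeds when the current user belongs to the group named in $1.
in_group() {
  id -nG | tr ' ' '\n' | grep -qxF -- "$1"
}

if in_group lxd; then
  echo "already in the lxd group"
else
  # Printed as a hint only; run it yourself if you need it.
  echo 'not in lxd yet; try: sudo usermod -aG lxd "$USER" && newgrp lxd'
fi
```

`newgrp lxd` starts a shell with the new group applied, so you do not have to log out and back in.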

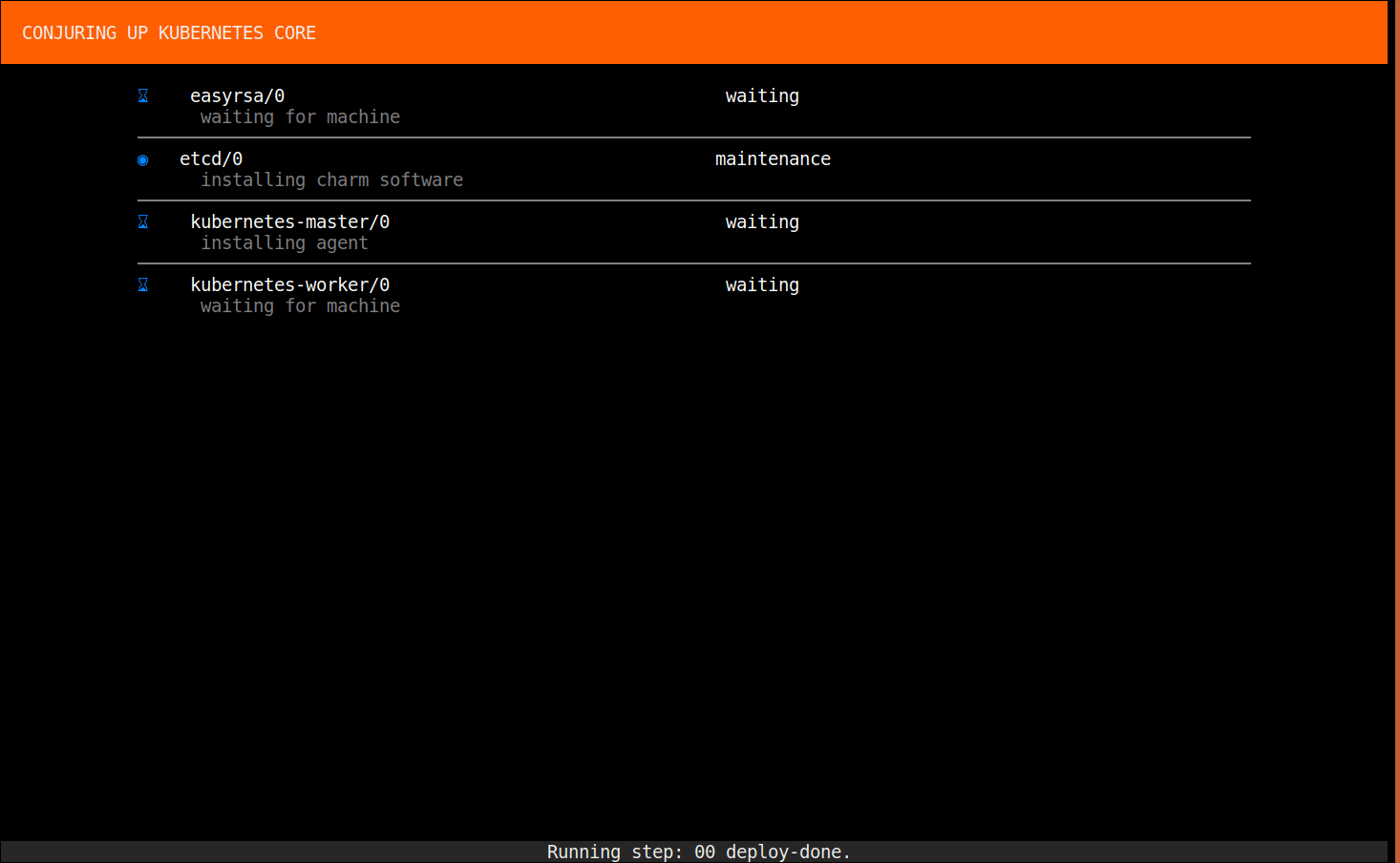

Congratulations. You now have a Kubernetes cluster up and running on your computer. It’s just that simple with conjure-up.
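One quick way to confirm the cluster is healthy is to count the nodes that are not reporting `Ready`. This is a sketch that assumes `kubectl` is on your PATH and already pointed at the new cluster; `count_not_ready` is an illustrative helper, not a kubectl feature.

```shell
# count_not_ready reads `kubectl get nodes --no-headers` output on stdin
# and reports how many nodes have a STATUS (column 2) other than "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is counted as not Ready.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { printf "%d of %d nodes not Ready\n", n, NR }'
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes --no-headers | count_not_ready
else
  echo "kubectl not found on PATH" >&2
fi
```

A result of `0 of 0` means kubectl could not list any nodes at all, which usually points at a missing or wrong kubeconfig rather than a healthy cluster.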

Helm

Helm’s templating system removes a lot of tedium and makes it much easier to deploy all but the simplest of things. My initial assumptions about Glowing Bear and how it worked were incorrect; I assumed the client/server diagram looked like this:

Incorrect Assumption

But in reality, Glowing Bear is a client-side JavaScript application that runs in the browser and connects to Weechat directly from there. This means the picture looks more like this:

How things actually work

Therefore, the Glowing Bear portion of this is mostly unnecessary unless you’re just concerned that the hosted version on the Glowing Bear page could be compromised. I was too far along to stop at this point, so I claimed that self-hosting Glowing Bear was a better solution and included it in my helm chart. We need a couple ingress rules then; one for Weechat and one for Glowing Bear. This also means you need to be aware of the security implications of opening up your Weechat to the world. You needed to be aware of it before, of course, but now you’re letting people poke two things directly.

While I am on the topic of security, let me discuss the TLS portion of this. Kubernetes allows us to use ingress and something like kube-lego to automagically get certificates for our domains. I will use that here, but you have to realize what this means. You have secure traffic from the client to your ingress termination point (nginx, for example). From there to the server actually running your application, it is clear text. This is probably fine if you’re in a physically isolated network, but if you’re on a shared tenant network, it might be something to concern yourself with.

The next step in creating our solution is to determine the images we want to use. I went to Docker Hub, searched for Weechat and Glowing Bear, and found someone who had made both images and had them available on GitHub, and they looked sane. I used those as the default images instead of trying to roll my own, because it seems silly to reinvent the wheel all the time. There are enough duplicate public images on Docker Hub as it is.

Now we just need to create the Helm files necessary for this. Instead of duplicating all the files here, I’ll link to the GitHub repo that I created for them; its URL is in the clone command below. The hardest part is ensuring you have an existing persistent volume claim for Weechat config storage. The default is named Weechat, but that can be changed in the values.yaml file. Simply edit the yaml file if you’re interested in changing things such as not deploying Glowing Bear or the persistent volume claim name:

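    git clone https://github.com/hyperbolic2346/ircChart
    cd ircChart
    vi values-example.yaml
    # Helm 2 syntax; with Helm 3 the release name is positional:
    #   helm install myircrelay -f values-example.yaml .
    helm install --name myircrelay -f values-example.yaml .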
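The chart expects the persistent volume claim to exist before the `helm install` above runs. A minimal sketch of creating one follows. The lowercase claim name `weechat`, the `1Gi` size, the `ReadWriteOnce` access mode, and the helper name `write_pvc_manifest` are all assumptions for illustration, so check them against the chart's values file and your cluster's storage setup.

```shell
# write_pvc_manifest is a hypothetical helper: it prints a
# PersistentVolumeClaim manifest for claim name $1 and size $2.
write_pvc_manifest() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $2
EOF
}

# Assumed name and size; this only prints the manifest for review.
write_pvc_manifest weechat 1Gi

# To create the claim, pipe the same output to kubectl:
#   write_pvc_manifest weechat 1Gi | kubectl apply -f -
```

Whether the claim actually binds depends on the cluster having a default storage class or a matching persistent volume, which this sketch does not set up.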

Now you have a working IRC setup that is ready to store and relay IRC for you. To get the relay set up, you need to find the persistent volume storage location and either hand-edit the config for Weechat or crank up a Weechat instance, change the settings there, and copy that config over to your persistent volume. I will show both methods:

Interactive setup

docker run -it --rm --tty -v /path/to/persistent/volume:/weechat jkaberg/weechat

Once inside your docker container:

    /relay add weechat 9000
    /set relay.network.password "supersecretpassword"

Hand-editing

    cat << EOF >> /path/to/persistent/volume/.weechat/relay.conf
    [network]
    password = "supersecretpassword"
    [port]
    weechat = 9000
    EOF
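Both methods above use a placeholder password. Since the relay ends up reachable through the ingress, a random one is a safer choice. Here is a small sketch using only standard tools; `gen_password` is an illustrative name and the 16-byte default is an arbitrary choice.

```shell
# gen_password reads $1 random bytes (default 16) from /dev/urandom
# and prints them as lowercase hex, two characters per byte.
gen_password() {
  head -c "${1:-16}" /dev/urandom | od -An -tx1 | tr -d ' \n'
  echo
}

# Prints a 32-character hex string to use in place of "supersecretpassword".
gen_password 16
```

Hex output avoids characters that might need quoting or escaping in `relay.conf` or on the Weechat command line.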

Wrapup

Kube-lego handles the TLS for us. This is another really nice package, but remember that once traffic hits the nginx proxy, the communication is no longer secured. At this point you should be able to hop on your Glowing Bear instance (or the hosted one) and enter the hostname of your Weechat ingress and your relay password. Glowing Bear will then connect to your relay, and from there you can connect to a server and join channels.
