LXD: five easy pieces

Michael Iatrou

on 26 January 2018

Tags: containers, LXD, machine containers, Ubuntu Product Month

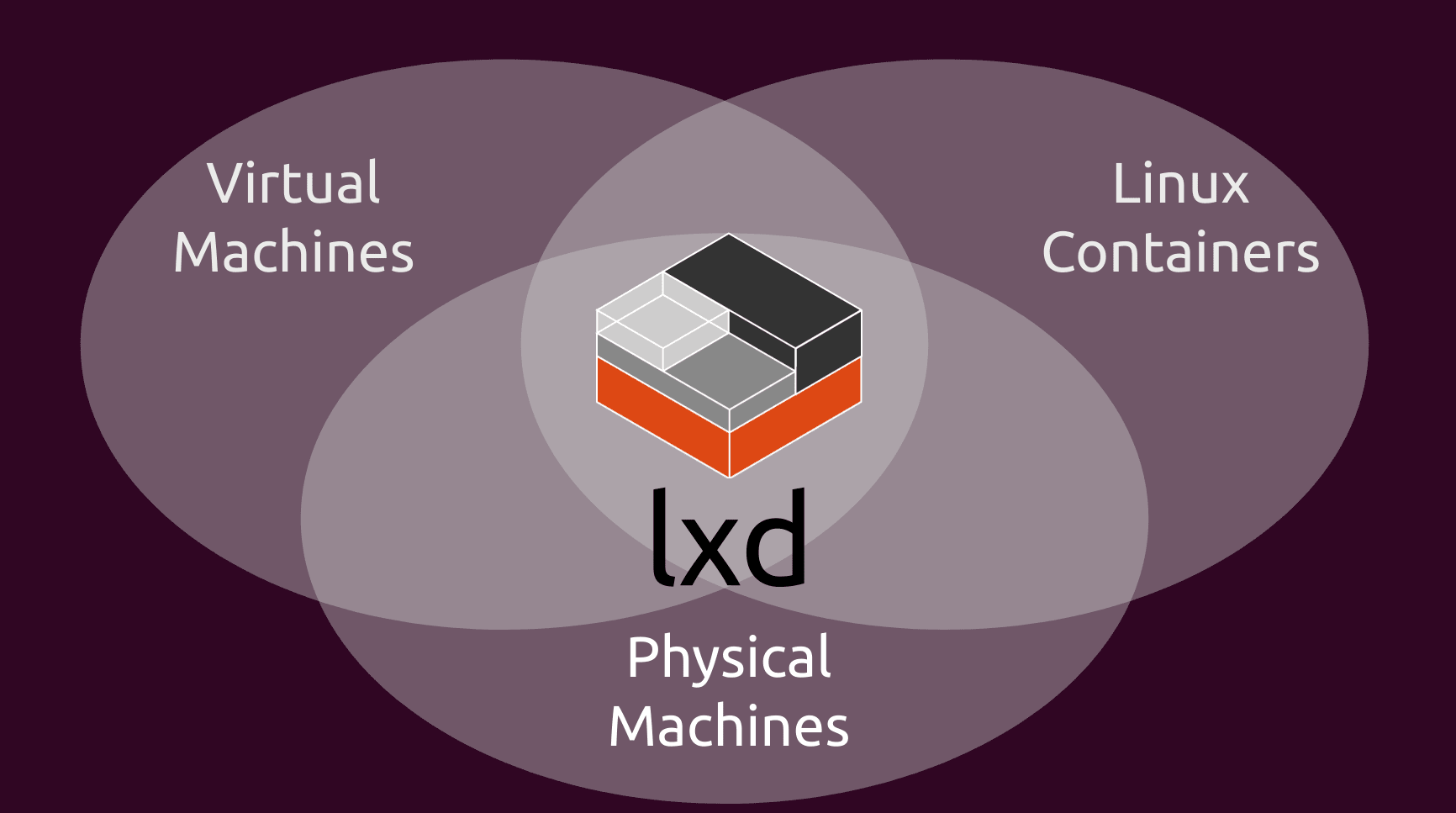

Machine containers such as LXD are proliferating in datacenters: they provide a native control plane for OpenStack and a lightweight hypervisor for its tenants. LXD optimizes resource allocation and utilization for Kubernetes clusters, modernizes workload management in HPC infrastructure, and streamlines lift and shift for legacy applications running on virtual machines or bare metal.

At the other end of the spectrum, LXD provides a great experience on developers’ laptops, for anything from maintaining traditional monolithic applications, to running lightweight disposable testing environments, to developing microservices encapsulated in process containers. If you are taking your first steps with LXD, here are 5 simple things worth knowing:

1. Install the latest LXD

Ubuntu Xenial comes with LXD installed by default, fully supported for production environments for at least 5 years as part of the LTS release. In parallel, the LXD team is quickly adding new features. If you want the latest and greatest, install LXD from xenial-backports:

$ sudo apt install -t xenial-backports lxd lxd-client

$ dpkg -l | grep lxd

ii lxd 2.21-0ubuntu amd64 Container hypervisor based on LXC

ii lxd-client 2.21-0ubuntu amd64 Container hypervisor based on LXC

With the packages from backports you will be able to follow the upstream development of LXD closely.
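To confirm which release you ended up with, the version check can be scripted. The sketch below assumes GNU sort with version ordering (-V), as shipped on Ubuntu, and that lxd --version prints a bare version string such as 2.21. The helper name version_ge is hypothetical and not part of LXD.

```shell
# version_ge A B: succeed when version A is greater than or equal to B.
# Relies on GNU sort -V (version sort); the helper name is illustrative.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only query LXD when the binary is actually installed.
if command -v lxd >/dev/null 2>&1; then
    installed="$(lxd --version)"
    if version_ge "$installed" 2.21; then
        echo "LXD $installed: backports (or newer) in use"
    else
        echo "LXD $installed: older than 2.21, check xenial-backports"
    fi
fi
```

The threshold 2.21 is simply the version shown in the dpkg output above; adjust it to whatever release you expect.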

2. Use ZFS

Containers see significant benefits from a copy-on-write filesystem like ZFS. If you have a separate disk or partition, use ZFS for the LXD storage pool. Make sure you install zfsutils-linux before you initialize LXD:

$ sudo apt install zfsutils-linux

$ lxd init

Do you want to configure a new storage pool (yes/no) [default=yes]?

Name of the new storage pool [default=default]:

Name of the storage backend to use (dir, btrfs, lvm, zfs) [default=zfs]:

Create a new ZFS pool (yes/no) [default=yes]?

Would you like to use an existing block device (yes/no) [default=no]? yes

Path to the existing block device: /dev/vdb1

Especially for launch, snapshot, restore and delete LXD operations, ZFS as a storage pool performs much better than the alternatives.
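If you want to get a feel for these operations on your own storage pool, a throwaway container can be cycled through all four. This is a minimal sketch: the container name zfs-test, the snapshot name clean and the helpers run and snapshot_cycle are made up for illustration, and DRY_RUN=1 only prints the commands so you can review them first.

```shell
# run: execute a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# snapshot_cycle NAME: launch, snapshot, restore and delete a throwaway
# container. The snapshot name "clean" is an arbitrary choice.
snapshot_cycle() {
    name="$1"
    run lxc launch ubuntu:16.04 "$name"
    run lxc snapshot "$name" clean
    run lxc restore "$name" clean
    run lxc delete --force "$name"
}
```

Running DRY_RUN=1 snapshot_cycle zfs-test lists the four lxc commands without touching LXD. Running it without DRY_RUN, for example under time in bash, gives a rough impression of how your storage backend behaves.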

3. Get a public cloud instance on your laptop

Whether you are using AWS, Azure or GCP, named instance types are the lingua franca for resource allocation. Do you want to get a feel for how the application you develop on your laptop would behave on a cloud instance? Quickly spin up an LXD container that matches the desired cloud instance type and try it:

$ lxc launch -t m3.large ubuntu:16.04 aws-m3large

Creating aws-m3large

Starting aws-m3large

$ lxc exec aws-m3large -- grep ^processor /proc/cpuinfo | wc -l

2

$ lxc exec aws-m3large -- free -m

total used free shared buff/cache available

Mem: 7680 121 7546 209 12 7546

LXD applied resource limits to the container, to restrict CPU and RAM and provide an experience analogous to the requested instance type. Does it look like your application could benefit from additional CPU cores? LXD allows you to modify the available resources on the fly:

$ lxc config set aws-m3large limits.cpu 3

$ lxc exec aws-m3large -- grep ^processor /proc/cpuinfo | wc -l

3

Quickly testing and adjusting container resources helps with accurate capacity planning and avoids overprovisioning.
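The manual checks above can be folded into a small verification helper. The sketch below parses the same /proc/cpuinfo and free -m output; the function names cpu_count, mem_total_mib and check_container are hypothetical, and the expected values are whatever you pass in.

```shell
# cpu_count: count "processor" entries in /proc/cpuinfo-style input on stdin.
cpu_count() {
    grep -c '^processor'
}

# mem_total_mib: extract the "total" column from `free -m` output on stdin.
mem_total_mib() {
    awk '/^Mem:/ { print $2 }'
}

# check_container NAME CPUS MIB: succeed when the container sees exactly
# the expected number of CPUs and MiB of RAM. Requires a running container.
check_container() {
    cpus="$(lxc exec "$1" -- cat /proc/cpuinfo | cpu_count)"
    mib="$(lxc exec "$1" -- free -m | mem_total_mib)"
    [ "$cpus" -eq "$2" ] && [ "$mib" -eq "$3" ]
}
```

With the container from this section, check_container aws-m3large 2 7680 should succeed right after launch, and stop succeeding once limits.cpu is raised to 3.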

4. Customize containers with profiles

LXD profiles store any configuration that is associated with a container, in the form of key/value pairs or device definitions. Each container can have one or more profiles applied to it. When multiple profiles are applied to a container, they are processed in the order they are specified, so a later profile overrides any option it shares with an earlier one. A default profile is created automatically and it’s used implicitly for every container, unless a different one is specified.
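The override order is easier to see with a toy model. The following sketch is not how LXD stores or merges profiles; it only imitates the rule with plain key=value files, where merge_profiles is a hypothetical helper and the file names stand in for profile names.

```shell
# merge_profiles FILE...: print the effective key=value set when the given
# files are applied in order; a later file overrides an earlier one.
merge_profiles() {
    awk -F= '
        !/=/ { next }                               # skip lines without a key
        !($1 in value) { order[++n] = $1 }          # remember first-seen order
        { value[$1] = substr($0, length($1) + 2) }  # last assignment wins
        END { for (i = 1; i <= n; i++) print order[i] "=" value[order[i]] }
    ' "$@"
}

# Two stand-in "profiles": the second one overrides limits.cpu only.
tmp="$(mktemp -d)"
printf 'limits.cpu=2\nboot.autostart=1\n' > "$tmp/default"
printf 'limits.cpu=4\n' > "$tmp/bigger"
merge_profiles "$tmp/default" "$tmp/bigger"
# prints:
#   limits.cpu=4
#   boot.autostart=1
rm -r "$tmp"
```

Swapping the two file arguments gives limits.cpu=2 instead, which is the same effect as changing the order of profiles on a container.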

A good example for customizing the default LXD profile is enabling public key SSH authentication. The Ubuntu LXD images have SSH enabled by default, but they do not have a password or a key. Let’s add one:

First, export the current profile:

$ lxc profile show default > lxd-profile-default.yaml

Modify the config mapping of the profile to include user.user-data as follows:

config:
  user.user-data: |
    #cloud-config
    ssh_authorized_keys:
      - @@SSHPUB@@
  environment.http_proxy: ""
  user.network_mode: ""
description: Default LXD profile
devices:
  eth0:
    nictype: bridged
    parent: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default
used_by: []

Make sure that you have generated an SSH key pair for the current user, and then run:

$ sed -ri "s'@@SSHPUB@@'$(cat ~/.ssh/id_rsa.pub)'" lxd-profile-default.yaml

And finally, update the default profile:

$ lxc profile edit default < lxd-profile-default.yaml

You should now be able to SSH into your LXD container, as you would do with a VM. And yes, adding SSH keys barely scratches the surface of just in time configuration possibilities using cloud-init.
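As an alternative to the placeholder substitution, the cloud-config document can be generated directly from the public key file. This is a sketch under assumptions: render_user_data is a hypothetical helper, and it expects a single-line OpenSSH public key such as ~/.ssh/id_rsa.pub.

```shell
# render_user_data PUBKEY_FILE: print a cloud-config document that authorizes
# the given public key. Only the first line of the file is used.
render_user_data() {
    key="$(head -n 1 "$1")"
    printf '#cloud-config\nssh_authorized_keys:\n  - %s\n' "$key"
}
```

The output can then be stored with lxc profile set default user.user-data "$(render_user_data ~/.ssh/id_rsa.pub)", using the same profile set subcommand shown later in this section. Keep in mind that cloud-init processes user data when a container first boots, so expect the key to appear in newly launched containers rather than existing ones.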

The LXD autostart behavior is another option to consider tuning in the default profile: out of the box, when the host machine boots, LXD brings back the containers that were running when it shut down. That is exactly the behavior you want for your servers. For your laptop though, you might want to start containers only as needed. You can configure autostart directly for the default profile, without using an intermediate YAML file for editing:

$ lxc profile set default boot.autostart 0

All existing and future containers with the default profile will be affected by this change. You can see the profiles applied to each existing container using:

$ lxc list -c ns46tSP
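The column string ns46tSP is compact but cryptic. The helper below spells it out; the mapping is an assumption based on the column shorthand printed by lxc list --help, so check it against your client version. The name describe_columns is made up for this example.

```shell
# describe_columns STRING: print one line per column letter in STRING.
describe_columns() {
    cols="$1"
    while [ -n "$cols" ]; do
        c="${cols%"${cols#?}"}"   # first character
        cols="${cols#?}"          # remainder
        case "$c" in
            n) echo "n: name" ;;
            s) echo "s: state" ;;
            4) echo "4: IPv4 address" ;;
            6) echo "6: IPv6 address" ;;
            t) echo "t: type (persistent or ephemeral)" ;;
            S) echo "S: number of snapshots" ;;
            P) echo "P: profiles" ;;
            *) echo "$c: not covered here, see lxc list --help" ;;
        esac
    done
}

describe_columns ns46tSP
```

The last column, P, is the one that shows which profiles each container uses.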

Familiarize yourself with the functionality profiles offer: it’s a force multiplier for day to day activities and invaluable for structured repeatability.

5. Access the containers without port-forwarding

LXD creates an “internal” Linux bridge during its initialization (lxd init). The bridge provides an isolated layer 2 segment for the containers, and connectivity with external networks takes place on layer 3 using NAT and port-forwarding. This behavior facilitates isolation and minimizes external exposure, both desirable characteristics. But because LXD containers offer machine-like operational semantics, for some use cases it’s appropriate to have LXD guests share the same network segment with their host.

Let’s see how this is easily configurable on a per container basis, using profiles. It’s assumed that on the host you have configured a bridge, named br0, with a port associated with an Ethernet interface.

We create a new LXD profile:

$ lxc profile create bridged

And then we map the eth0 NIC of the container to the host bridge br0:

$ cat lxd-profile-bridged.yaml

description: Bridged networking LXD profile
devices:
  eth0:
    name: eth0
    nictype: bridged
    parent: br0
    type: nic

$ lxc profile edit bridged < lxd-profile-bridged.yaml
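If your host bridge is not called br0, the profile can be generated with the bridge name as a parameter instead of editing the file by hand. A minimal sketch, where bridged_profile_yaml is a hypothetical helper and br0 is only the default:

```shell
# bridged_profile_yaml [BRIDGE]: print a profile that attaches the container's
# eth0 to the given host bridge (defaults to br0).
bridged_profile_yaml() {
    parent="${1:-br0}"
    cat <<EOF
description: Bridged networking LXD profile
devices:
  eth0:
    name: eth0
    nictype: bridged
    parent: $parent
    type: nic
EOF
}
```

The output can be piped straight into the edit command, for example bridged_profile_yaml br0 | lxc profile edit bridged. The lxc client also offers lxc profile device add for attaching a device without YAML; see lxc profile --help for its exact syntax.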

Finally, we launch a new container with both the default and the bridged profile. On the command line we specify bridged last, so it supersedes the overlapping network configuration.

$ lxc launch ubuntu:x c1 -p default -p bridged

The container will be using DHCP to retrieve an IP address, on the same subnet as the host:

$ ip a s br0

3: br0: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether AA:BB:CC:DD:EE:FF brd ff:ff:ff:ff:ff:ff

inet 192.168.1.171/24 brd 192.168.1.255 scope global br0

valid_lft forever preferred_lft forever

$ lxc exec c1 -- ip a s eth0

101: eth0@if102: mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:AA:BB:CC:DD:EE brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.172/24 brd 192.168.1.255 scope global eth0

valid_lft forever preferred_lft forever
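Whether two addresses really share a subnet can be checked with a little integer arithmetic: mask both addresses with the prefix length and compare the results. The sketch below uses only shell arithmetic; ip_to_int, network_of and same_subnet are hypothetical helper names, and it handles plain dotted-quad IPv4 addresses in CIDR notation only.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    old_ifs="$IFS"; IFS=.
    set -- $1            # split the address into its four octets
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# network_of ADDR/PREFIX: print the network address as an integer.
network_of() {
    addr="${1%/*}"
    prefix="${1#*/}"
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    echo $(( $(ip_to_int "$addr") & mask ))
}

# same_subnet CIDR1 CIDR2: succeed when both have the same prefix length
# and the same network address.
same_subnet() {
    [ "${1#*/}" = "${2#*/}" ] && [ "$(network_of "$1")" = "$(network_of "$2")" ]
}

# Host and container addresses from the output above.
same_subnet 192.168.1.171/24 192.168.1.172/24 && echo "same subnet"
```

Both addresses reduce to the network 192.168.1.0, so the check succeeds for the host and the container shown here.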

Bridged networking is the simplest way to develop and test applications with LXD in more production-like network environments. Another networking configuration alternative is macvlan but be aware of its caveats, mainly host-guest communication and hardware support limitations.

These 5 easy pieces are just the tip of the iceberg. LXD liberates your laptop from the tyranny of heavyweight virtualization and simplifies experimentation. Join the Linux Containers community to discuss your use cases and questions. Delve into the documentation for inspiration and send us PRs with your favorite new features.

LXD is the kind of tool that, after you get to know each other, using it on a daily basis feels inevitable. Give it a try!

Want to know more?

On February 7th, technical lead Stephane Graber will be presenting a webinar for Ubuntu Product Month that will dive into how LXD works, what it does, how it can be used in the enterprise, and even provide an opportunity for Q&A.

